Dataset Card for GEMBench dataset

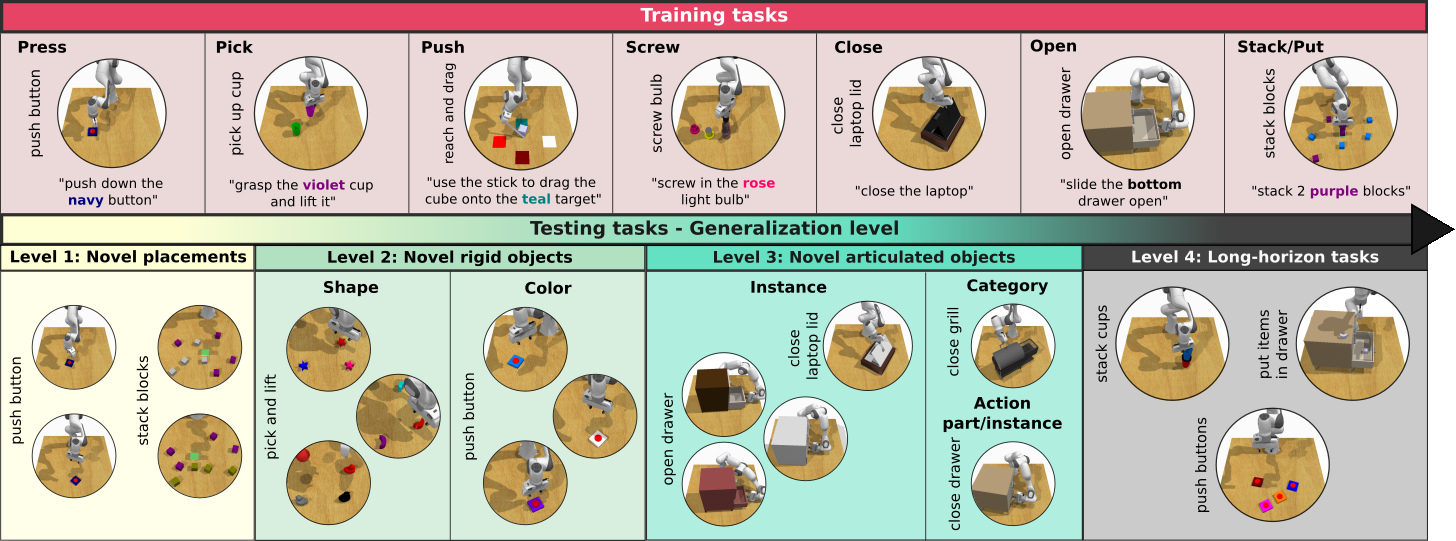

💎 GEneralizable vision-language robotic Manipulation Benchmark Dataset

GEMBench is a benchmark for systematically evaluating the generalization capabilities of vision-and-language robotic manipulation policies. It is built upon the RLBench simulator.

💻 GEMBench Project Webpage: https://www.di.ens.fr/willow/research/gembench/

📈 Leaderboard: https://paperswithcode.com/sota/robot-manipulation-generalization-on-gembench

Dataset Structure

The dataset is organized as follows:

- gembench
    - train_dataset
        - microsteps: 567M, initial configurations for each episode
        - keysteps_bbox: 160G, extracted keysteps data
        - keysteps_bbox_pcd: (used to train 3D-LOTUS)
            - voxel1cm: 10G, processed point clouds
            - instr_embeds_clip.npy: instructions encoded by CLIP text encoder
        - motion_keysteps_bbox_pcd: (used to train 3D-LOTUS++ motion planner)
            - voxel1cm: 2.8G, processed point clouds
            - action_embeds_clip.npy: action names encoded by CLIP text encoder
    - val_dataset
        - microsteps: 110M, initial configurations for each episode
        - keysteps_bbox_pcd:
            - voxel1cm: 941M, processed point clouds
    - test_dataset
        - microsteps: 2.2G, initial configurations for each episode
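After downloading, it can be useful to confirm that a local copy matches the layout listed above. The following is a minimal sketch, not part of the official tooling: the function name `check_gembench_layout` is hypothetical, and it assumes the entries exist on disk under exactly the names shown in the listing (for example, after any archives have been extracted).

```shell
# Sketch: check that a local dataset root contains the entries from the listing.
# check_gembench_layout is a hypothetical helper, not part of GEMBench.
check_gembench_layout() {
    local root="$1"
    local missing=0
    local rel
    # Relative paths copied from the directory listing in this card.
    for rel in \
        train_dataset/microsteps \
        train_dataset/keysteps_bbox \
        train_dataset/keysteps_bbox_pcd/voxel1cm \
        train_dataset/keysteps_bbox_pcd/instr_embeds_clip.npy \
        train_dataset/motion_keysteps_bbox_pcd/voxel1cm \
        train_dataset/motion_keysteps_bbox_pcd/action_embeds_clip.npy \
        val_dataset/microsteps \
        val_dataset/keysteps_bbox_pcd/voxel1cm \
        test_dataset/microsteps
    do
        # -e accepts both files and directories.
        if [ ! -e "$root/$rel" ]; then
            echo "missing: $root/$rel" >&2
            missing=1
        fi
    done
    # Returns 0 when every entry is present, 1 otherwise.
    return "$missing"
}
```

For example, `check_gembench_layout /path/to/gembench` prints one line per missing entry and returns a non-zero status if anything is absent. If you only need a subset of the data (say, only `val_dataset`), trim the path list accordingly.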

🛠️ Benchmark Installation

- Create and activate your conda environment:

conda create -n gembench python==3.10

conda activate gembench

- Install RLBench

Download CoppeliaSim (see instructions here):

# change the version if necessary

wget https://www.coppeliarobotics.com/files/V4_1_0/CoppeliaSim_Edu_V4_1_0_Ubuntu20_04.tar.xz

tar -xvf CoppeliaSim_Edu_V4_1_0_Ubuntu20_04.tar.xz

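Add the following to your ~/.bashrc file, replacing $(pwd) with the absolute path of the directory where you extracted CoppeliaSim (inside ~/.bashrc, $(pwd) would be re-evaluated in every new shell and point to the wrong location):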

export COPPELIASIM_ROOT=$(pwd)/CoppeliaSim_Edu_V4_1_0_Ubuntu20_04

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$COPPELIASIM_ROOT

export QT_QPA_PLATFORM_PLUGIN_PATH=$COPPELIASIM_ROOT
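Once the variables are in place, a quick check in a fresh shell can catch a wrong path before PyRep is built. The sketch below is illustrative only: `check_coppeliasim_env` is a hypothetical helper, and it merely verifies the three variables set above.

```shell
# Sketch: verify the three environment variables from the ~/.bashrc snippet.
# check_coppeliasim_env is a hypothetical helper name.
check_coppeliasim_env() {
    if [ -z "${COPPELIASIM_ROOT:-}" ]; then
        echo "COPPELIASIM_ROOT is not set" >&2
        return 1
    fi
    if [ ! -d "$COPPELIASIM_ROOT" ]; then
        echo "COPPELIASIM_ROOT is not a directory: $COPPELIASIM_ROOT" >&2
        return 1
    fi
    # Wrap in colons so the root is matched as a whole path entry.
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$COPPELIASIM_ROOT:"*) ;;
        *)
            echo "LD_LIBRARY_PATH does not contain $COPPELIASIM_ROOT" >&2
            return 1
            ;;
    esac
    if [ "${QT_QPA_PLATFORM_PLUGIN_PATH:-}" != "$COPPELIASIM_ROOT" ]; then
        echo "QT_QPA_PLATFORM_PLUGIN_PATH does not match COPPELIASIM_ROOT" >&2
        return 1
    fi
    return 0
}
```

Running `check_coppeliasim_env` after `source ~/.bashrc` returns 0 when all three variables are consistent and otherwise prints the first problem it finds.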

Install PyRep and RLBench:

git clone https://github.com/cshizhe/PyRep.git

cd PyRep

pip install -r requirements.txt

pip install .

cd ..

# Our modified version of RLBench to support new tasks in GemBench

git clone https://github.com/rjgpinel/RLBench

cd RLBench

pip install -r requirements.txt

pip install .

cd ..
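To confirm that both packages landed in the active environment, one option is to try importing them. This is a minimal sketch under the assumption that the packages are importable as `pyrep` and `rlbench`; `check_python_modules` is a hypothetical helper, not something shipped with either project.

```shell
# Sketch: try to import each named module with the given Python interpreter.
# check_python_modules is a hypothetical helper name.
check_python_modules() {
    local py="$1"
    shift
    local mod
    local failed=0
    for mod in "$@"; do
        if "$py" -c "import $mod" >/dev/null 2>&1; then
            echo "ok: $mod"
        else
            echo "cannot import: $mod" >&2
            failed=1
        fi
    done
    # Returns 0 only if every module imported successfully.
    return "$failed"
}

# Example (run inside the activated gembench environment):
#   check_python_modules python pyrep rlbench
```

If `pyrep` fails to import even though `pip install .` succeeded, the CoppeliaSim environment variables may not be loaded in the current shell.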

Evaluation

For evaluation, please refer to the official 3D-LOTUS++ code repository:

https://github.com/vlc-robot/robot-3dlotus?tab=readme-ov-file#evaluation

Citation

If you use our GemBench benchmark or find our code helpful, please kindly cite our work:

BibTeX:

@inproceedings{garcia25gembench,

author = {Ricardo Garcia and Shizhe Chen and Cordelia Schmid},

title = {Towards Generalizable Vision-Language Robotic Manipulation: A Benchmark and LLM-guided 3D Policy},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

year = {2025}

}

Contact
